Paraphrase Label Alignment for Voice Application Retrieval in Spoken Language Understanding

(longer introduction)

| Zheng Gao (Amazon, USA), Radhika Arava (Amazon, USA), Qian Hu (Amazon, USA), Xibin Gao (Amazon, USA), Thahir Mohamed (Amazon, USA), Wei Xiao (Amazon, USA), Mohamed AbdelHady (Amazon, USA) |
|---|

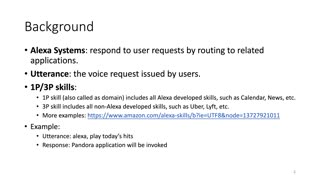

Spoken language understanding (SLU) smart assistants such as Amazon Alexa host hundreds of thousands of voice applications (skills) to delight end users and fulfill their utterance requests. Sometimes the assistant fails to claim an utterance because of system problems such as model incapability or routing errors. Such failures may lead to customer frustration and dialog termination, and eventually to customer churn. To avoid this, we design a skill retrieval system as a downstream service that suggests fallback skills for unclaimed utterances. If the suggested skill satisfies the customer's intent, the conversation with the assistant is recovered. For the sake of a smooth customer experience, we present only the most relevant skill to customers, which results in a partial observation problem that constrains retrieval model training. To solve this problem, we propose a two-step approach that automatically aligns claimed utterance labels to unclaimed utterances. Extensive experiments on two real-world datasets demonstrate that our proposed model significantly outperforms a number of strong alternatives.