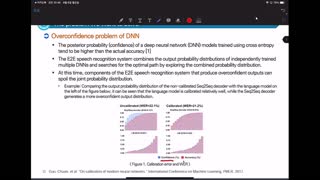

Deep neural network calibration for E2E speech recognition system

(3 minutes introduction)

| Mun-Hak Lee (Hanyang University, Korea), Joon-Hyuk Chang (Hanyang University, Korea) |
|---|

Cross-entropy loss, which is commonly used to train deep neural network (DNN) classification models, induces models to assign a high probability to a single class. Networks trained in this fashion tend to be overconfident, which causes a problem in the decoding process of a speech recognition system, because decoding combines the probability distributions of multiple independently trained networks. Overconfidence in neural networks can be quantified as a calibration error, which is the difference between the probability a model outputs and the actual likelihood that its prediction is correct. We show that the deep-learning-based components of an end-to-end (E2E) speech recognition system contain calibration errors even though they achieve high classification accuracy, and we quantify these errors using various calibration measures. In addition, we show experimentally that a calibration function trained to minimize calibration error effectively mitigates the calibration errors of the speech recognition system and, as a result, can improve the performance of beam search during decoding.
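To make the notion of calibration error concrete, the sketch below computes the expected calibration error (ECE), one widely used calibration measure. The abstract does not say which measures the authors use, so ECE is an assumption here, and the function name `expected_calibration_error`, the equal-width binning, and the default of 10 bins are illustrative choices rather than the authors' setup. Predictions are grouped into confidence bins, and the gap between the mean confidence and the observed accuracy in each bin is averaged, weighted by bin size.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |accuracy - mean confidence| over equal-width bins.

    confidences: top-class probabilities in [0, 1], one per prediction.
    correct:     truthy where the corresponding prediction was right.
    Hypothetical helper for illustration; not taken from the paper.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Per-bin sample count, sum of confidences, and number of correct predictions.
    counts = [0] * n_bins
    conf_sums = [0.0] * n_bins
    hit_sums = [0] * n_bins
    for p, c in zip(confidences, correct):
        # Map p to a bin index; p == 1.0 falls into the last bin.
        b = min(int(p * n_bins), n_bins - 1)
        counts[b] += 1
        conf_sums[b] += p
        hit_sums[b] += 1 if c else 0

    ece = 0.0
    for cnt, conf_sum, hits in zip(counts, conf_sums, hit_sums):
        if cnt == 0:
            continue  # empty bins contribute nothing
        avg_conf = conf_sum / cnt
        accuracy = hits / cnt
        ece += (cnt / n) * abs(accuracy - avg_conf)
    return ece


# An overconfident toy model: 95% confidence on every prediction, half of them wrong.
overconfident = expected_calibration_error([0.95] * 4, [1, 1, 0, 0])

# A calibrated toy model: 75% confidence, three out of four predictions right.
calibrated = expected_calibration_error([0.75] * 4, [1, 1, 1, 0])
```

In this toy data the overconfident model has a gap of roughly 0.45 between confidence and accuracy, while the calibrated one has no gap. A calibration function of the kind the abstract describes would be trained to shrink that gap.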